At ITQ, we like to experiment with technology and push the limits of what’s possible. When we present sessions about the Art of the Possible, they are actually based on what we have built. We have a passion for technology, and combining that passion with the drive to always get the most out of a solution, product or technology sometimes produces amazing results. The F1 simulator we built last year was such a project. It doesn’t make much sense (why would anyone run an F1 simulator in a virtual desktop?), but it does show what VDI is capable of.

Last year I traveled the world to present the project and its results, show the demo and spread the VDI word.

The idea for the project came from wanting to show the power of VDI in combination with vGPU, VMware Horizon and USB redirection at different IT conferences, the first being the Belgian VMUG (mid-June 2018). The first idea was to play a soccer game like FIFA 18, but since the Netherlands didn’t qualify for the 2018 World Cup and Belgium did, we had to come up with something else. Max Verstappen was doing really well in Formula 1, so an F1 game like F1 2017 was the perfect alternative. The Q in ITQ stands for Quality, so the simulator had to be based on top-quality products. After a successful proof of concept with the game, VMware Horizon 7.5, an NVIDIA Tesla P4, my (previous) Supermicro homelab and a keyboard as the controller, we decided to order everything we needed to build a 2-player setup.

The following items were used in the “production” kit:

- A Supermicro-based host containing:
    - Intel Xeon E5-2620 V2 @ 2.2 GHz
    - 128 GB RAM
    - Samsung 850 PRO SSD
    - NVIDIA Tesla P4 (including the custom cooling solution)
- VMware vSphere 6.5 and VMware Horizon 7.5 with Blast Extreme
- NVIDIA GRID vGPU 7.0
- A virtual desktop VM running:
    - Windows 10 x64 1607 (since newer versions had USB redirection issues)
    - A P4-4Q vGPU profile (since 4 GB of framebuffer was enough)
    - A single monitor at 1080p
    - Steam and F1 2017
- An ASUS gaming PC with an Intel Core i5-7300HQ and a GeForce GTX 1060 (to run the game natively and compare the differences with the virtual desktop)
- An Atrust T180W thin client (sponsored by ThinClientSpecialist)
- 2 x Logitech G920 Force Feedback steering wheels
- 2 x Playseat Sensation Pro, each with an iiyama 40-inch gaming monitor

A single kit looked like this:

Ok, let’s build!

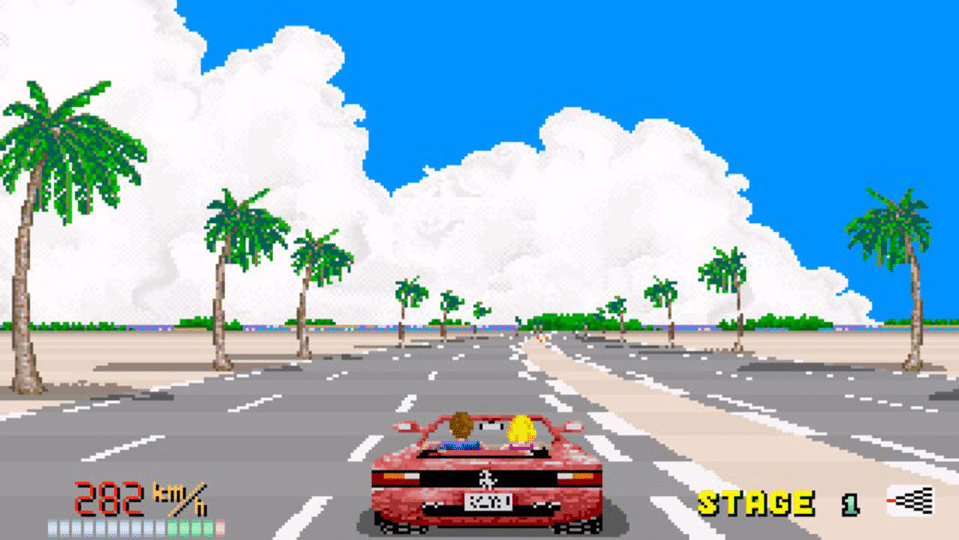

Of course, as a geek, you rarely read manuals. You just start building stuff and expect it to work immediately. And as you might guess, that didn’t happen. The result after the first deployment was an experience that felt like playing video games in 1990 while being drunk.

The symptoms were as follows:

- 15 ms round trip latency between endpoint and virtual desktop

- 4 vCPUs permanently running at 95% – 98%

- 4 GB of framebuffer used

- GPU usage at 70%

- 60 frames per second
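To put the 15 ms round trip in perspective, it helps to compare it with the time available per frame. The sketch below is plain arithmetic, not a measurement from our setup: at 60 FPS each frame gets roughly 16.7 ms, so the network round trip alone adds close to one frame’s worth of delay before any encoding or decoding happens. The 120 FPS case is included for comparison with a GPU that drives a monitor directly.

```python
def frame_time_ms(fps: float) -> float:
    """Time available to produce one frame at the given frame rate, in milliseconds."""
    return 1000.0 / fps


def latency_in_frames(rtt_ms: float, fps: float) -> float:
    """Express a network round-trip time as a number of frame intervals."""
    return rtt_ms / frame_time_ms(fps)


# 15 ms is the round-trip latency we saw between endpoint and virtual desktop.
for fps in (60, 120):
    print(f"{fps} FPS -> {frame_time_ms(fps):.2f} ms per frame, "
          f"15 ms RTT = {latency_in_frames(15, fps):.2f} frames")
```

At 60 FPS this works out to about 0.9 frames of extra delay, and at 120 FPS to about 1.8 frames.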

Switching to PCoIP made the user experience even worse.

What you need to know is that a game like F1 2017 is, of course, not built to run in a VM. It will fully utilize the CPU and the GPU if it can. That means running it on top of a hypervisor might introduce latency that doesn’t exist when running the same game on bare metal. Also, when the GPU is directly attached to a monitor, the frame rate can easily exceed 120 FPS without any noticeable latency. Transferring rendered frames over the network to a thin client is like trying to cram something really thick through a funnel. On top of that, a Logitech G920 steering wheel isn’t necessarily designed to be redirected through a channel in Blast Extreme.
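The funnel comparison becomes concrete with a quick bandwidth estimate. This minimal sketch assumes uncompressed 24-bit colour with no chroma subsampling; it only shows how much data the encoder has to squeeze before frames go over the network, and says nothing about what Blast Extreme actually sends.

```python
def raw_bitrate_gbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Uncompressed video bitrate in gigabits per second (1 Gbit = 10**9 bits)."""
    return width * height * bits_per_pixel * fps / 1e9


# 1080p, assuming 24 bits per pixel (8 bits each for R, G and B).
for fps in (60, 120):
    print(f"1080p @ {fps} FPS: "
          f"{raw_bitrate_gbps(1920, 1080, 24, fps):.2f} Gbit/s uncompressed")
```

Even at 60 FPS that is roughly 3 Gbit/s, about three times what a 1 Gbit/s link can carry, which is why the choice of encoder and its quality settings matters so much.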

Troubleshooting galore

Since we suspected the CPU was the bottleneck, we tried the following to resolve it:

- Added 2 additional vCPUs
    - CPU usage still remained at 95% – 98%
    - GPU usage didn’t increase
- Set CPU reservations for the virtual desktop
    - Latency decreased a bit
    - It had (almost) no effect on GPU and CPU usage
- Replaced the CPU with a faster one: an Intel Xeon E5-2680 V2 @ 2.8 GHz
    - GPU usage increased dramatically, to 95%
    - CPU usage decreased a bit, to 70% – 80%
    - As a result, latency dropped, but the image quality still wasn’t the same as on the gaming PC
- Adjusted some of the Blast Extreme parameters:
    - Forced the virtual desktop to use TCP instead of UDP (which reduced frame drops and increased the frame rate)
    - Made sure the H.264 encoder was used instead of JPG/PNG, which lowers the latency
    - Set minQP to 10 and maxQP to 26. These are the preferred starting values when you favor image quality over lower bandwidth consumption. Check out this post for more information about QP values
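To see why a lower QP means better quality, it helps to look at what QP does in H.264: it selects the quantizer step size, and the step doubles for every increase of 6 in QP (valid QP values run from 0 to 51). A larger step throws away more detail and saves bandwidth. The sketch below uses the standard H.264 step-size table; the function names are hypothetical, and it assumes (as we understand these settings) that the encoder stays between minQP and maxQP.

```python
# H.264 quantizer step sizes for QP 0..5; the step doubles every 6 QP values.
BASE_QSTEP = (0.625, 0.6875, 0.8125, 0.875, 1.0, 1.125)


def h264_qstep(qp: int) -> float:
    """Return the H.264 quantizer step size for a given QP (0-51)."""
    if not 0 <= qp <= 51:
        raise ValueError("H.264 QP must be in the range 0-51")
    return BASE_QSTEP[qp % 6] * 2 ** (qp // 6)


def qp_range_steps(min_qp: int, max_qp: int) -> tuple[float, float]:
    """Finest and coarsest step sizes allowed by a (hypothetical) minQP/maxQP pair."""
    if min_qp > max_qp:
        raise ValueError("minQP must not exceed maxQP")
    return h264_qstep(min_qp), h264_qstep(max_qp)


finest, coarsest = qp_range_steps(10, 26)
print(f"minQP 10 -> step {finest}, maxQP 26 -> step {coarsest}")
```

With minQP 10 and maxQP 26, the step size ranges from 2.0 to 13.0, so the coarsest quantization the encoder may fall back to is 6.5 times coarser than the finest. Raising maxQP lets the encoder compress more aggressively when bandwidth gets tight, at the cost of visible artifacts.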

After all these adjustments (with a bit of help from GPU guru Jared Cowart), we finally got the setup running really smoothly, without any noticeable difference from the gaming PC. Watch the following video to see the differences for yourself:

Of course, you might not believe me, since you haven’t seen the actual thin client and backend running. Luckily, I have another video of Brian Madden test-driving the setup:

Conclusion

With this successful showcase of VDI combined with a GPU, we wanted to show the real power of VDI. It’s a solution capable of running apps and using peripherals that aren’t built for a virtual desktop (but then again, which app or peripheral is?).


At the tested resolution of 1080p, the game’s performance on the virtual desktop was quite comparable to that on the gaming PC. Interestingly, GPU usage increased as soon as faster CPUs were added to the ESXi host. This is because the CPU needs to keep pace with the GPU, delivering the data the GPU has to process.
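The CPU/GPU relationship can be illustrated with a toy two-stage pipeline: the CPU prepares frames, the GPU renders them, and the slower stage sets the pace. This is a deliberately simplified model that ignores frame caps, encoding and hypervisor overhead, and the capacities below are hypothetical numbers picked to mirror the 70% and 95% GPU usage we observed, not measurements.

```python
def pipeline(cpu_fps: float, gpu_fps: float) -> tuple[float, float]:
    """Return (delivered FPS, GPU utilisation) for a simple two-stage pipeline.

    The CPU can prepare cpu_fps frames per second and the GPU can render gpu_fps
    frames per second; the slower stage determines the delivered frame rate.
    """
    delivered = min(cpu_fps, gpu_fps)
    return delivered, delivered / gpu_fps


# Hypothetical capacities: same GPU, slower vs. faster CPU.
GPU_CAPACITY = 100.0
for label, cpu_capacity in (("slower CPU", 70.0), ("faster CPU", 95.0)):
    fps, gpu_util = pipeline(cpu_capacity, GPU_CAPACITY)
    print(f"{label}: {fps:.0f} FPS delivered, GPU busy {gpu_util:.0%}")
```

In this model a GPU can never be busier than the CPU allows it to be, which matches what we saw: the same Tesla P4 only reached 95% usage once the faster Xeon could feed it quickly enough.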

The post How to build an F1 simulator game in a VDI appeared first on vHojan.nl.

The original article was posted on: vhojan.nl