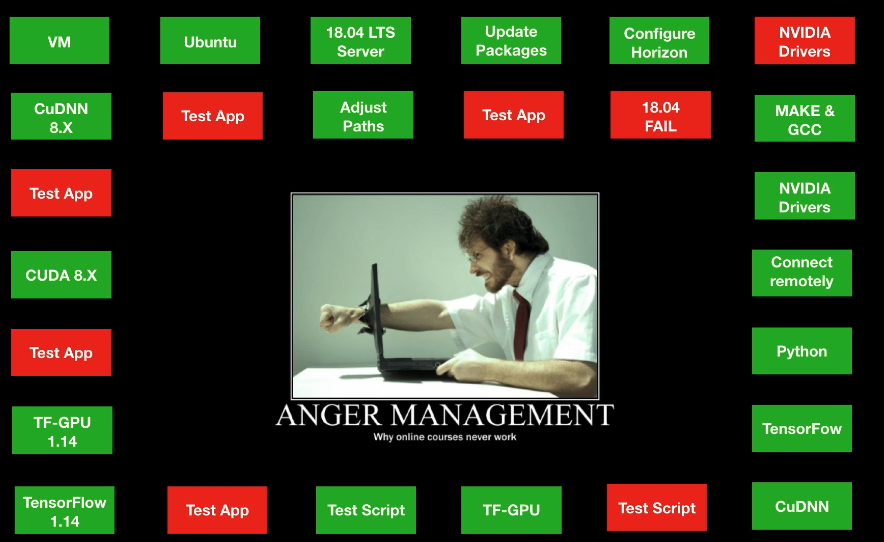

In the previous post, I described my first attempt to build a VDI platform for deep learning applications. This post collects the information I used to build Linux virtual desktops for AI and data science. It’s a live blog, which means I will update it whenever the process changes.

Building and Provisioning the desktop

The first step is to fully set up Horizon to use Linux Desktops. The following guide is quite thorough:

Set up Docker

Setting up Docker isn’t that hard, but there are a couple of things to take into account when installing (NVIDIA) Docker on a virtual desktop. The first step is to install Docker and NVIDIA Docker:

Docker installation: https://github.com/NVIDIA/nvidia-docker/issues/913
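
Once both packages are installed, it can be useful to confirm that Docker actually knows about the NVIDIA runtime. The following is a minimal Python sketch, not part of the linked guide. It assumes an nvidia-docker2 style installation, where the runtime is registered under the name `nvidia`; the helper names `has_nvidia_runtime` and `query_docker_runtimes` are my own.

```python
import json
import subprocess


def has_nvidia_runtime(runtimes_json: str) -> bool:
    """Return True if the JSON map of Docker runtimes has an 'nvidia' entry."""
    runtimes = json.loads(runtimes_json)
    return isinstance(runtimes, dict) and "nvidia" in runtimes


def query_docker_runtimes() -> str:
    """Ask the local Docker daemon for its registered runtimes as JSON."""
    result = subprocess.run(
        ["docker", "info", "--format", "{{json .Runtimes}}"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    try:
        found = has_nvidia_runtime(query_docker_runtimes())
    except (OSError, subprocess.CalledProcessError, ValueError) as exc:
        # Docker is missing, the daemon is unreachable, or the output is not JSON.
        print(f"Could not query Docker: {exc}")
    else:
        print("nvidia runtime registered" if found else "nvidia runtime not found")
```

If the runtime is not found, the installation steps in the issue linked above are the place to start looking.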

After the Docker installation, the Horizon Agent needs to be adjusted:

Adjusting Horizon Agent after Docker installation: https://communities.vmware.com/thread/597866

Deploying a container

If you need to install a different CUDA version, see the installation guide:

CUDA installation for Ubuntu: https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#system-requirements

Selecting the proper CUDA runtime for Docker:

https://github.com/NVIDIA/nvidia-docker/issues/861

https://github.com/NVIDIA/nvidia-docker/wiki/CUDA#requirements

https://askubuntu.com/questions/917356/how-to-verify-cuda-installation-in-16-04
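
Picking a CUDA runtime mostly comes down to whether the driver in the desktop is new enough for the CUDA version inside the container. Here is a rough Python sketch of that check. The minimum driver versions in the table are an assumption for illustration and should be verified against NVIDIA's own requirements pages; the function names are hypothetical.

```python
import subprocess

# Minimum Linux driver version per CUDA release.
# ASSUMPTION: illustrative values only; verify them against NVIDIA's
# compatibility tables before relying on them.
MIN_DRIVER_FOR_CUDA = {
    "9.0": "384.81",
    "10.0": "410.48",
    "10.1": "418.39",
}


def parse_version(version: str) -> tuple:
    """Turn a dotted version such as '418.67' into a comparable tuple (418, 67)."""
    return tuple(int(part) for part in version.strip().split("."))


def driver_supports_cuda(driver_version: str, cuda_version: str) -> bool:
    """Return True if the driver meets the minimum listed for this CUDA version."""
    minimum = MIN_DRIVER_FOR_CUDA[cuda_version]
    return parse_version(driver_version) >= parse_version(minimum)


def installed_driver_version() -> str:
    """Read the driver version of the first GPU reported by nvidia-smi."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.splitlines()[0].strip()


if __name__ == "__main__":
    try:
        driver = installed_driver_version()
    except (OSError, subprocess.CalledProcessError, IndexError) as exc:
        print(f"Could not read the driver version: {exc}")
    else:
        for cuda in sorted(MIN_DRIVER_FOR_CUDA, key=parse_version):
            verdict = "ok" if driver_supports_cuda(driver, cuda) else "driver too old"
            print(f"CUDA {cuda}: {verdict} (driver {driver})")
```

Versions are compared as integer tuples rather than strings, so a driver such as 418.100 is correctly treated as newer than 418.39.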

Finally, you can pull a container from the NGC cloud:

Using NGC containers with vGPU: https://docs.nvidia.com/ngc/ngc-vgpu-setup-guide/
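
As a sketch of what pulling and smoke-testing an NGC container could look like, the Python snippet below only builds and prints the Docker commands. The repository `nvidia/tensorflow` and the tag `19.05-py3` are placeholders that you should replace with whatever image you need, and the `--runtime=nvidia` flag assumes an nvidia-docker2 setup (newer Docker releases use `--gpus all` instead). Pulling from `nvcr.io` also requires a prior `docker login nvcr.io` with your NGC API key.

```python
import shlex

NGC_REGISTRY = "nvcr.io"


def ngc_image(repository: str, tag: str) -> str:
    """Build a full NGC image reference such as nvcr.io/<repository>:<tag>."""
    return f"{NGC_REGISTRY}/{repository}:{tag}"


def pull_command(image: str) -> list:
    """Command that pulls the image from the registry."""
    return ["docker", "pull", image]


def smoke_test_command(image: str) -> list:
    """Command that runs nvidia-smi inside the container to check GPU access.

    ASSUMPTION: --runtime=nvidia matches an nvidia-docker2 installation.
    """
    return ["docker", "run", "--rm", "--runtime=nvidia", image, "nvidia-smi"]


def as_shell(command: list) -> str:
    """Render a command list as a copy-pasteable shell line."""
    return " ".join(shlex.quote(part) for part in command)


if __name__ == "__main__":
    # Placeholder repository and tag; pick the image you actually want.
    image = ngc_image("nvidia/tensorflow", "19.05-py3")
    print(as_shell(pull_command(image)))
    print(as_shell(smoke_test_command(image)))
```

If `nvidia-smi` inside the container lists the vGPU, the container can see the GPU and the desktop is ready for actual workloads.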

The post Mastering voodoo: Building Linux VDI for Deep Learning workloads, Part 2 appeared first on vHojan.nl.
