For many years, ITQ has been at the forefront of bringing Artificial Intelligence, with its subsets Deep Learning and Machine Learning, to our customers. As we are one of just a handful of global partners who developed dedicated services for both NVIDIA and VMware (and Pivotal, prior to the VMware acquisition), we have combined the knowledge of virtual datacenters (VMware), computational and graphical acceleration (NVIDIA), and modern application development (Pivotal, now VMware Tanzu) to help our customers consume AI frameworks. Designing, deploying, and maintaining such an AI stack has been a big challenge for customers, since the different technologies weren't really integrated. Sure, you could spin up a Linux VM on a vSphere cluster, attach a GPU, and run a Docker container on it, but quite often it took multiple weeks, or even months, before a platform was ready to integrate with applications. We were very excited to see our partners NVIDIA and VMware announce their own partnership to simplify the process of bringing AI to the Enterprise during VMworld in 2020 with Project Monterey. This week, we are thrilled to see that they are taking that partnership to the next level by launching NVIDIA AI Enterprise. This post explains what it is and how you as a customer can benefit from this extensive integration.

What are Artificial Intelligence, Machine Learning, and Deep Learning?

To understand what AI is, and how Machine Learning and Deep Learning work, please check the following video we recorded with Jits Langedijk from NVIDIA:

To understand why AI frameworks require such a complex infrastructure, check out the following light board video:

So you see, building a platform that can run Deep Learning frameworks such as TensorFlow is quite complex. Many different aspects have to be taken into account, which introduces a lot of administrative overhead.

How is NVIDIA AI Enterprise going to solve this?

One of the challenges most organizations face is the gap between data science teams and infrastructure teams. If the data science team wants to run a new training job and requires a large number of GPUs to do so, the infra team quite often has a hard time provisioning them on such short notice. Also, infra teams still treat data science teams as developers, which they are not. Sure, they are able to write code, but quite often they don't use complete CI/CD pipelines and hardly know what a Docker container is. A DevOps team could help both of them, but until now they didn't have the proper tools to orchestrate GPU-accelerated containers.

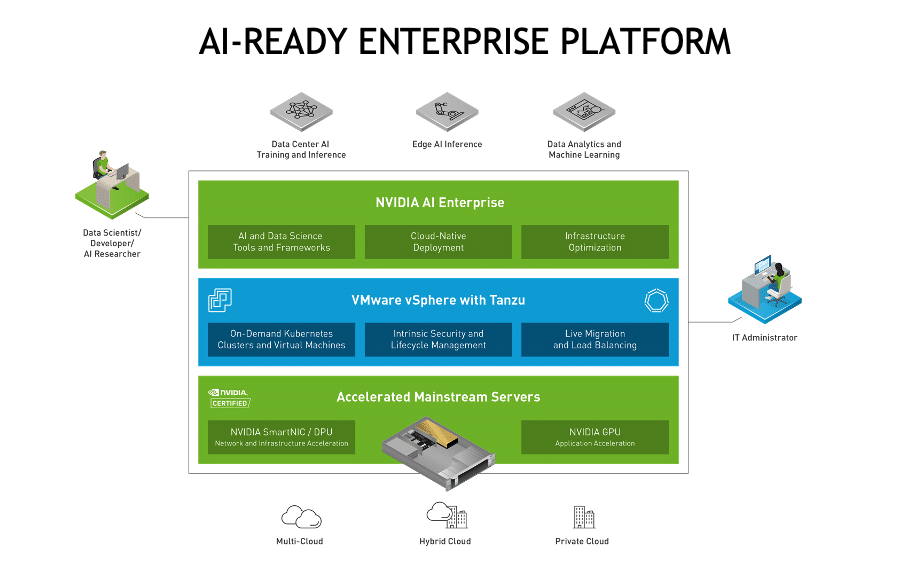

This has all changed now that NVIDIA and VMware have partnered to offer a fully standardized AI stack that runs on the following components:

- VMware vSphere with full support for GPUs such as the NVIDIA A100

- GPU support for containers running on VMware Tanzu

- Automated workflows to integrate NVIDIA GPU Cloud (NGC) into the VCF-powered VMware Tanzu platform, accelerated with NVIDIA GPUs and GPU software (NVIDIA vGPU or NVIDIA MIG)
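
To make "GPU support for containers" a bit more concrete: VMware Tanzu is a Kubernetes platform, and on Kubernetes a container typically asks for a GPU through the `nvidia.com/gpu` resource that the NVIDIA device plugin advertises. The following is a minimal sketch in Python that builds such a Pod manifest. The helper name `gpu_pod_manifest`, the pod name, and the image reference are placeholders chosen for illustration (the image tag is deliberately left as `<tag>`); how NVIDIA AI Enterprise exposes GPUs in your specific environment may differ.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests NVIDIA GPUs.

    Assumption: the cluster advertises GPUs under the extended resource
    name "nvidia.com/gpu" (as the NVIDIA Kubernetes device plugin does).
    """
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested via limits, as whole units.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image; replace "<tag>" with a real NGC image tag.
    manifest = gpu_pod_manifest("training-job", "nvcr.io/nvidia/tensorflow:<tag>")
    print(json.dumps(manifest, indent=2))
```

Since `kubectl` accepts JSON as well as YAML, the printed manifest could be saved to a file and applied as-is once the image placeholder is filled in.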

VMware vSphere with VMware Tanzu already simplified the deployment of modern applications, enabling developers to do what they are best at: writing and pushing code to a platform that automates the process of bringing that code to production.

With the integration between VMware vSphere, VMware Tanzu, and NVIDIA AI Enterprise, NVIDIA and VMware are now enabling data scientists to do what they do best as well: analyzing data by creating algorithms and training jobs.

The following figure shows the NVIDIA AI Enterprise stack:

Now, one thing that stands out is that NVIDIA doesn't only offer GPUs, but also offers SmartNICs as part of the solution. Just like the seemingly endless thirst for GPUs, the demand for fast networks with low latencies is also increasing. Take one of our customers, the Netherlands Cancer Institute, as an example. Where a single microscopy image could be 10-15 Megabytes 20 years ago, it's quite common for a single image to be multiple Terabytes in 2021. When training a model on a dataset containing many of these images, you can imagine that fast networks are essential to keep the GPUs busy. This is where NVIDIA's SmartNICs enable customers to get the most out of their data science workflows.
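
To get a feeling for the numbers, here is a back-of-the-envelope sketch in Python that estimates how long it takes to move a single image over the network. The 2 TB image size stands in for "multiple Terabytes", and the link speeds of 10, 25, and 100 Gbit/s are assumptions picked for illustration, not measurements from the customer environment. The calculation also ignores protocol overhead and storage throughput, so real transfers will be slower.

```python
def transfer_seconds(size_bytes: float, link_gbit_per_s: float) -> float:
    """Ideal time to move size_bytes over a link of the given line rate.

    Uses decimal units (1 Gbit = 1e9 bits) and assumes the link is fully
    utilized with no protocol or storage overhead.
    """
    if link_gbit_per_s <= 0:
        raise ValueError("link speed must be positive")
    return size_bytes * 8 / (link_gbit_per_s * 1e9)


if __name__ == "__main__":
    old_image = 15e6  # 15 MB, the upper end of the "20 years ago" range
    new_image = 2e12  # 2 TB, an assumed example of a "multiple Terabytes" image

    for gbit in (10, 25, 100):  # assumed link speeds in Gbit/s
        old_t = transfer_seconds(old_image, gbit)
        new_t = transfer_seconds(new_image, gbit)
        print(f"{gbit:>3} Gbit/s: 15 MB in {old_t:.3f} s, 2 TB in {new_t / 60:.1f} min")
```

Even in this idealized model, a single 2 TB image takes close to half an hour on a 10 Gbit/s link, and a training dataset contains many such images. That is time the GPUs spend waiting unless the network keeps up.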

How can ITQ help you?

Consuming a platform like NVIDIA AI Enterprise solves a lot of orchestration issues and enables IT to simplify the provisioning of GPU resources, but migrating your data science workflows to NVIDIA AI Enterprise can be a challenge. Integrating those workflows into CI/CD pipelines, introducing a proper way of versioning, and enabling them to work with Docker containers are essential to getting the most out of the platform. This is where we can help. We are very experienced in designing and deploying each of the individual layers of the stack, as well as integrating all of them.

If you would like to know more about NVIDIA AI Enterprise, VMware vSphere, VMware Tanzu, or the NVIDIA NGC platform, feel free to reach out. We are always happy to jump on a call or drink a cup of coffee while talking AI.