My colleague Christiaan Roeleveld wrote an interesting piece on Facebook’s Open Compute Project. Chris wrapped up the article with the following statement:

"So I suspect there will also be a lot of other manufacturers jumping in to build these innovative new solutions. Whether the big vendors participate or not, I think OCP will be a huge success and it will drive down server prices dramatically."

I decided to do some additional research because the whole idea of OCP really interested me. I came across Intel’s "take" on OCP and its vision for future datacenters: hyperscale datacenters using - what Intel calls - Rack Scale Architecture. Intel showed a working rack using this new reference architecture at IDF13, but I completely missed it.

Rack Scale what?

So what is a hyperscale datacenter and what is a Rack Scale Architecture? As the name suggests, hyperscale datacenters are datacenters that have the ability to scale out immensely and with incredible ease. Workloads increase exponentially, so the datacenter has to be able to scale to match these new demands. Intel’s vision for these hyperscale datacenters is laid out in three steps: today, next and future.

- Today

With today’s technology physical aggregation is possible. This means systems share power and cooling facilities and rack management is aggregated. - Next

The next step is fabric integration. This means a centralized rack fabric, ultra high speed optical interconnects and modular refresh. - Future:

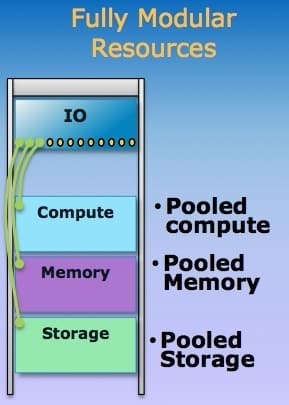

In the future Intel envisions fully modular resources in a rack. The compute, memory, storage and IO resources are segregated and pooled at the physical layer.

The future looks modular

I would like to dive a bit deeper into Intel’s vision for the future: fully modular racks. When you come to think of it, what we are doing with hypervisors is actually a bit strange. We take a whole bunch of compute, storage and IO resources (CPU, memory, SSD, HDD, I/O). We physically split these resources and put them in separate boxes. Using hypervisors we then try to bring these resources back together in a logical pool, which we eventually distribute to our workloads as needed. With Rack Scale Architecture (RSA) this pooling of resources is actually done at the physical layer. RSA brings a couple of obvious advantages over the traditional approach:

- The first advantage is the ability to optimize the hardware for a given workload. You can provision more CPU cores to compute-intensive workloads or provision more IO resources to background applications with lots of server-to-server traffic. Mind you, this is done at the physical layer.

- Another big plus is the ability to easily craft or compose servers out of the pooled resources. Define some CPU, RAM, storage and IO resources and you can have a brand new server up and running in no time.

- The last advantage I would like to point out is component-level upgrades. Upgrading the network is done by just swapping out the network modules. There is no need to take out entire server enclosures.
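To make the idea of composing servers from a pool a bit more concrete, here is a minimal Python sketch. It is purely illustrative: `RackPool`, `ComposedServer`, `compose` and `release` are names I made up, the units are arbitrary, and none of this reflects an actual Intel or OCP interface. It only models the bookkeeping: a rack holds free capacity, and a server is a reservation against it.

```python
from dataclasses import dataclass


@dataclass
class ComposedServer:
    """A logical server carved out of a rack's pooled resources (hypothetical)."""
    name: str
    cpu_cores: int
    memory_gb: int
    storage_tb: int
    io_ports: int


@dataclass
class RackPool:
    """Free capacity of one rack, in made-up units for illustration only."""
    cpu_cores: int
    memory_gb: int
    storage_tb: int
    io_ports: int

    def compose(self, name, cpu_cores, memory_gb, storage_tb, io_ports):
        """Reserve the requested resources and return a ComposedServer."""
        request = {
            "cpu_cores": cpu_cores,
            "memory_gb": memory_gb,
            "storage_tb": storage_tb,
            "io_ports": io_ports,
        }
        # Check everything first so a failed request leaves the pool untouched.
        for resource, amount in request.items():
            if amount > getattr(self, resource):
                raise ValueError(f"not enough {resource} left in the pool")
        # All checks passed: subtract the reservation from the free capacity.
        for resource, amount in request.items():
            setattr(self, resource, getattr(self, resource) - amount)
        return ComposedServer(name=name, **request)

    def release(self, server):
        """Return a server's resources to the pool."""
        for resource in ("cpu_cores", "memory_gb", "storage_tb", "io_ports"):
            free = getattr(self, resource) + getattr(server, resource)
            setattr(self, resource, free)


if __name__ == "__main__":
    pool = RackPool(cpu_cores=64, memory_gb=512, storage_tb=100, io_ports=16)
    # A compute-heavy server and an IO-heavy server from the same rack.
    render = pool.compose("render01", cpu_cores=32, memory_gb=128,
                          storage_tb=5, io_ports=2)
    proxy = pool.compose("proxy01", cpu_cores=4, memory_gb=32,
                         storage_tb=1, io_ports=8)
    print(render)
    print(proxy)
    print("left in the rack:", pool)
```

The two example servers mirror the first advantage above: one gets most of the cores, the other most of the IO ports, and both come from the same rack.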

Breaking down an RSA rack

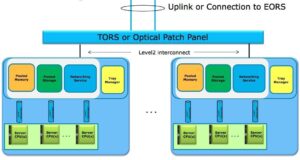

When we break down the several hardware layers of the Rack Scale Architecture we can see, as the name suggests, that the unit of scaling is the rack itself. The Management Domain aggregates racks together in a pod. The rack itself consists of trays, which contain memory, storage, network modules or compute modules. A tray manager will manage all these components and everything is interconnected using photonics and switch fabrics.
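The hierarchy described above (pod, rack, tray, module) can be sketched as a simple nested data structure. This is only my own illustration of the layering in Python; the class names and the `inventory` helper are hypothetical, and the tray manager and fabric are left out entirely.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class Module:
    """One pluggable resource module in a tray."""
    kind: str  # "compute", "memory", "storage" or "network"


@dataclass
class Tray:
    """A tray holds modules; in RSA a tray manager would manage them."""
    modules: List[Module] = field(default_factory=list)


@dataclass
class Rack:
    """The rack is the unit of scaling and consists of trays."""
    trays: List[Tray] = field(default_factory=list)


@dataclass
class Pod:
    """A pod is a group of racks aggregated by the management domain."""
    racks: List[Rack] = field(default_factory=list)


def inventory(pod: Pod) -> Counter:
    """Count the modules of each kind across all racks and trays in a pod."""
    return Counter(
        module.kind
        for rack in pod.racks
        for tray in rack.trays
        for module in tray.modules
    )


if __name__ == "__main__":
    rack = Rack(trays=[
        Tray([Module("compute"), Module("compute")]),
        Tray([Module("memory"), Module("storage"), Module("network")]),
    ])
    pod = Pod(racks=[rack, rack])
    print(inventory(pod))
```

Walking the structure top-down like this is also how a pod-level view of available resources could be built up from the individual trays.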

What about virtualization?

Does RSA mean the days of server virtualization are coming to an end? Quite the opposite! This is another huge step towards a fully software defined datacenter: orchestration software to craft servers when needed, Software Defined Networking to provision L2 to L7 network services on x86 technology, Software Defined Storage, et cetera. Physical pooling and sharing of resources also brings new opportunities for virtualization. Think of how insanely fast a vMotion will be when two servers can share the same memory blocks (courtesy of Memory Sharing and the Pooled Memory Controller). Of course operating systems and hypervisors will need to be modified to support these new technologies, because RSA fundamentally uses physical resources in a different way, but the future looks very promising!

I have to say that I completely agree with Chris that our datacenters will be completely different in the (near) future! Intel actively participates in the Open Compute Project and is driving alignment of RSA with OCP. Very interesting times!

There is a lot of technical detail I had to leave out of this introductory blog post. If you are looking for the nitty-gritty details, I highly recommend reading the following SF13 technical slide deck: